Ugh. Ok, so I want to run some deep learning algorithms on a GPU. I haven’t touched Google Cloud Platform (GCP) in about two months, so I don’t remember how to create an instance, connect to my instance, or get my image files onto my instance. To avoid the same scramble two months from now, here are some notes. You’re welcome, future Frank 🙂

First, go to the GCP Console… and in the Navigation Menu see if you have any instances running (hopefully not, because you have to pay for these and you’re not hosting anything at the moment). Looks like no instances, good.

Let’s do our work from the terminal, for which you’ll need the Google Cloud SDK installed on your laptop. You may have recently re-imaged your laptop… which means you’ll need to reinstall gcloud (the command line SDK). Download the Google Cloud SDK zip file and unzip it in your home directory. Then run this in Terminal:

./google-cloud-sdk/install.sh

If you run into issues at some point, here’s the link to the SDK quick start: https://cloud.google.com/sdk/docs/quickstart. Assuming everything is good (and you say Ok to modifying the path, etc.)… run this:

./google-cloud-sdk/bin/gcloud init

It will ask you to log into your Google account. For some reason this was sort of a hassle, but after 2-3 attempts it worked (when I switched my default browser from Safari to Chrome, that seemed to do the trick). Then I picked a preexisting project (frank-runs), but did not configure a default Compute region / zone (I do that next).
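If you would rather store a default region and zone after skipping them during init, gcloud config set is one way to do it. This is only a sketch: set_compute_defaults is a hypothetical helper name, and us-west1 / us-west1-b are simply the region and zone used in the rest of these notes.

```shell
# Hypothetical helper: store a default region and zone in the active gcloud configuration.
set_compute_defaults() {
  region="$1"   # e.g. us-west1
  zone="$2"     # e.g. us-west1-b
  gcloud config set compute/region "$region"
  gcloud config set compute/zone "$zone"
}

# Example call (commented out so that pasting the block only defines the helper):
# set_compute_defaults us-west1 us-west1-b
```

With defaults stored, --zone can usually be left off later commands. These notes keep passing it explicitly, which is harmless.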

From here, right off the bat I tried to create an instance ($ gcloud compute instances create) and hit this error:

-bash: gcloud: command not found

So I tried this (./google-cloud-sdk/bin/gcloud compute instances create) instead and it worked (note to self to understand why this works!!! The oddest part is that after I closed and opened Terminal all I needed was $gcloud to run Google Cloud commands).
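The most likely explanation: saying Ok to modifying the path makes the installer edit your shell profile, and that file is only read when a new shell starts. The Terminal window that was already open still had the old PATH, so only the full path to gcloud worked there. A minimal sketch for fixing the current session without reopening Terminal, assuming the SDK was unzipped into your home directory as described above:

```shell
# Assumes the SDK lives in ~/google-cloud-sdk (adjust if you unzipped it elsewhere).
SDK_BIN="$HOME/google-cloud-sdk/bin"

# Add the SDK's bin directory to PATH for this session only, if it is missing.
case ":$PATH:" in
  *":$SDK_BIN:"*) ;;                    # already on PATH, nothing to do
  *) export PATH="$SDK_BIN:$PATH" ;;
esac

# Report whether the shell can now resolve `gcloud`.
if command -v gcloud >/dev/null 2>&1; then
  echo "gcloud found at: $(command -v gcloud)"
else
  echo "gcloud still not found; check that $SDK_BIN exists"
fi
```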

Create a Compute Instance

export IMAGE_FAMILY="pytorch-latest-gpu" # or "pytorch-latest-cpu" for non-GPU instances

export ZONE="us-west1-b"

export INSTANCE_NAME="my-fastai-instance"

export INSTANCE_TYPE="n1-highmem-8" # budget: "n1-highmem-4"

gcloud compute instances create $INSTANCE_NAME \
    --zone=$ZONE \
    --image-family=$IMAGE_FAMILY \
    --image-project=deeplearning-platform-release \
    --maintenance-policy=TERMINATE \
    --accelerator="type=nvidia-tesla-p100,count=1" \
    --machine-type=$INSTANCE_TYPE \
    --boot-disk-size=200GB \
    --metadata="install-nvidia-driver=True" \
    --preemptible

Another error…

ERROR: (gcloud.compute.instances.create) Could not fetch resource: - The resource 'projects/frank-runs/zones/us-west1-b/instances/my-fastai-instance' already exists

What?!?! I thought I didn’t have any instances running. 🤔 Ahh, Ok. When I looked originally I looked in Navigation Menu -> App Engine -> Instances. What I should have done was Navigation Menu -> Compute Engine -> Instances and I would have seen the living my-fastai-instance that I used for the FastAI videos. So I fired up (‘started’) the instance in the GCP Console. Now try to connect via the Terminal:

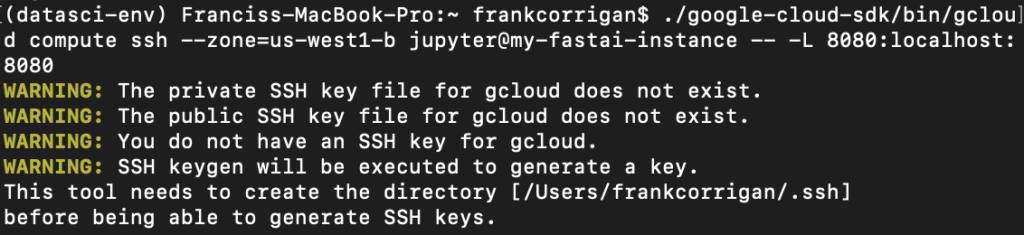

gcloud compute ssh --zone=us-west1-b jupyter@my-fastai-instance -- -L 8080:localhost:8080

./google-cloud-sdk/bin/gcloud compute ssh --zone=us-west1-b jupyter@my-fastai-instance -- -L 8080:localhost:8080

Pull my hair out, no ssh keys set up since I re-imaged my laptop.

SSH Keys

Not a huge deal. I set up ssh keys on my laptop and, luckily, I remembered my instance password from 2 months ago. Never forget your password… ever.

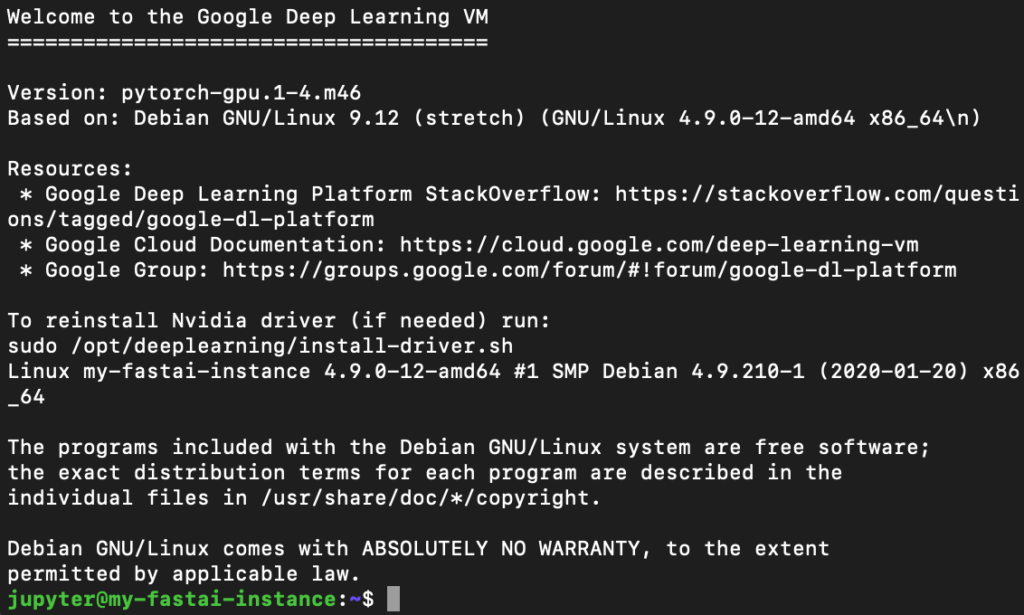
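The exact steps I took are in the screenshot. As a rough aid for next time: on a standard install, gcloud compute ssh looks for a key pair at ~/.ssh/google_compute_engine and, as far as I know, generates one on first use if it is missing. The sketch below only checks whether that pair exists. check_gcloud_key is a hypothetical helper name, and the default path is an assumption about a standard install.

```shell
# Hypothetical helper: report whether an SSH key pair exists at the given path.
# With no argument it checks the path gcloud uses by default (assumed standard install).
check_gcloud_key() {
  key="${1:-$HOME/.ssh/google_compute_engine}"
  if [ -f "$key" ] && [ -f "$key.pub" ]; then
    echo "key pair found"
  else
    echo "key pair missing"
  fi
}

check_gcloud_key
```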

After setting up the ssh key, I am able to connect to the instance and Jupyter:

In Chrome, go to http://localhost:8080/lab and you are off and running in JupyterLab (on your instance, of course).
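Why localhost works: the -L 8080:localhost:8080 part of the ssh command forwards port 8080 on the laptop to port 8080 on the instance, so the browser talks to Jupyter through the SSH connection. If 8080 is already taken on the laptop, a different local port should work too. A small sketch that just prints the command (tunnel_cmd is a hypothetical helper, not part of gcloud):

```shell
# Hypothetical helper: print the ssh command for a given local port.
tunnel_cmd() {
  local_port="${1:-8080}"   # port on the laptop; 8080 is what these notes use
  echo "gcloud compute ssh --zone=us-west1-b jupyter@my-fastai-instance -- -L ${local_port}:localhost:8080"
}

tunnel_cmd        # laptop port 8080 -> instance port 8080
tunnel_cmd 8081   # laptop port 8081 -> instance port 8080
```

With the second variant, the address to open in the browser becomes http://localhost:8081/lab.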

Transfer from Instance to Laptop and Vice Versa

The notebook and images I want to use are not on the instance – they are on my local machine. So let’s transfer them:

Local to Instance

# https://cloud.google.com/compute/docs/instances/transfer-files#transfergcloud

gcloud compute scp local-file-path jupyter@my-fastai-instance:~

Where it says local-file-path, use no quotes or anything like that, just the path (quotes only matter if the path contains spaces). If the path is a directory rather than a single file, add --recurse. For example, if the path is /Users/frankcorrigan/Repositories/deep-learning then the whole command is…

gcloud compute scp --recurse /Users/frankcorrigan/Repositories/deep-learning jupyter@my-fastai-instance:~

Then going the other way – instance to laptop – would be like this…

Instance to Local

gcloud compute scp --recurse instance-name:remote-dir local-dir

gcloud compute scp --recurse my-fastai-instance:/home/jupyter/.fastai/gcp_sub_1.csv /Users/frankcorrigan
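To save retyping, both directions can be wrapped in two small shell functions. This is a sketch, not the only way to do it: upload and download are hypothetical helper names, and the zone and the jupyter@ user are assumptions carried over from the ssh command earlier in these notes.

```shell
ZONE="us-west1-b"
INSTANCE="jupyter@my-fastai-instance"

# Laptop -> instance home directory. --recurse is required when $1 is a directory.
upload() {
  gcloud compute scp --recurse --zone="$ZONE" "$1" "$INSTANCE:~"
}

# Instance -> laptop. $1 is the remote path, $2 is the local destination.
download() {
  gcloud compute scp --recurse --zone="$ZONE" "$INSTANCE:$1" "$2"
}

# Example calls (commented out so that pasting the block only defines the helpers):
# upload /Users/frankcorrigan/Repositories/deep-learning
# download /home/jupyter/.fastai/gcp_sub_1.csv /Users/frankcorrigan
```

Passing --zone explicitly keeps gcloud from prompting for the zone when no default is configured.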

Other Notes Along the Way

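On the compute instance itself (in the GCP Console), find the Custom metadata option, click Add Item, and enter the key and value below, so that SSH is allowed through the instance’s ufw firewall every time it boots: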

startup-script

sudo ufw allow ssh
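The same key and value can probably be set from the terminal instead of the Console with gcloud compute instances add-metadata. A sketch, assuming the instance name and zone used elsewhere in these notes (set_startup_script is a hypothetical helper name):

```shell
# Hypothetical helper: set the startup-script metadata key on the instance.
# Instance name and zone are the ones used in these notes; change them as needed.
set_startup_script() {
  gcloud compute instances add-metadata my-fastai-instance \
    --zone=us-west1-b \
    --metadata=startup-script='sudo ufw allow ssh'
}

# Example call (commented out so that pasting the block only defines the helper):
# set_startup_script
```

The script runs when the instance boots, so it takes effect on the next start rather than immediately.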

I appreciate FastAI for their helpful walkthrough tutorial.

Note on Cost

I ran a DCGAN (in Jupyter) for ~5 (actual) hours and it cost me ~$5.
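Those numbers work out to roughly a dollar an hour, which is a good reason to stop the instance when you are done. A sketch of both the arithmetic and the stop command. hourly_rate and stop_instance are hypothetical helper names, and the instance name and zone are the ones used above.

```shell
# Rough hourly rate from a total cost (USD) and a number of hours.
hourly_rate() {
  awk -v usd="$1" -v hrs="$2" 'BEGIN { printf "%.2f\n", usd / hrs }'
}

# Figures from the note above: about 5 dollars for about 5 hours.
echo "approx. USD per hour: $(hourly_rate 5 5)"

# Stop the instance so the GPU and VM are no longer billed.
stop_instance() {
  gcloud compute instances stop my-fastai-instance --zone=us-west1-b
}

# Example call (commented out so that pasting the block does not stop anything):
# stop_instance
```

A stopped instance still has its boot disk, which continues to be billed, so the cost does not drop all the way to zero. Running gcloud compute instances list afterwards shows each instance with its status.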